Scaling is dead, long live Scaling

A perspective on the challenges in self-supervised nucleotide foundation modelling

If you had to name the central dogma of machine learning these days, it’d probably be the bitter lesson, which teaches us that general methods leveraging exponentially falling computational costs ultimately outperform methods that bake in human knowledge. This intuition has seen resounding success in natural language processing and computer vision, where the limiting factor for large language models seems to have become accumulating enough GPUs and finding the energy to power them, rather than any modelling breakthrough.

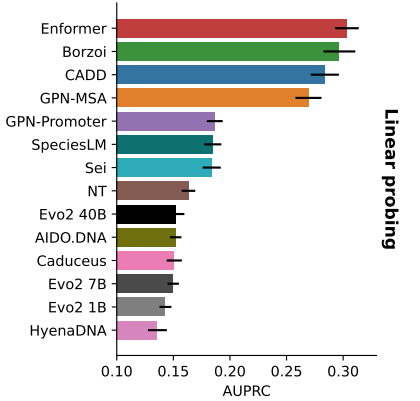

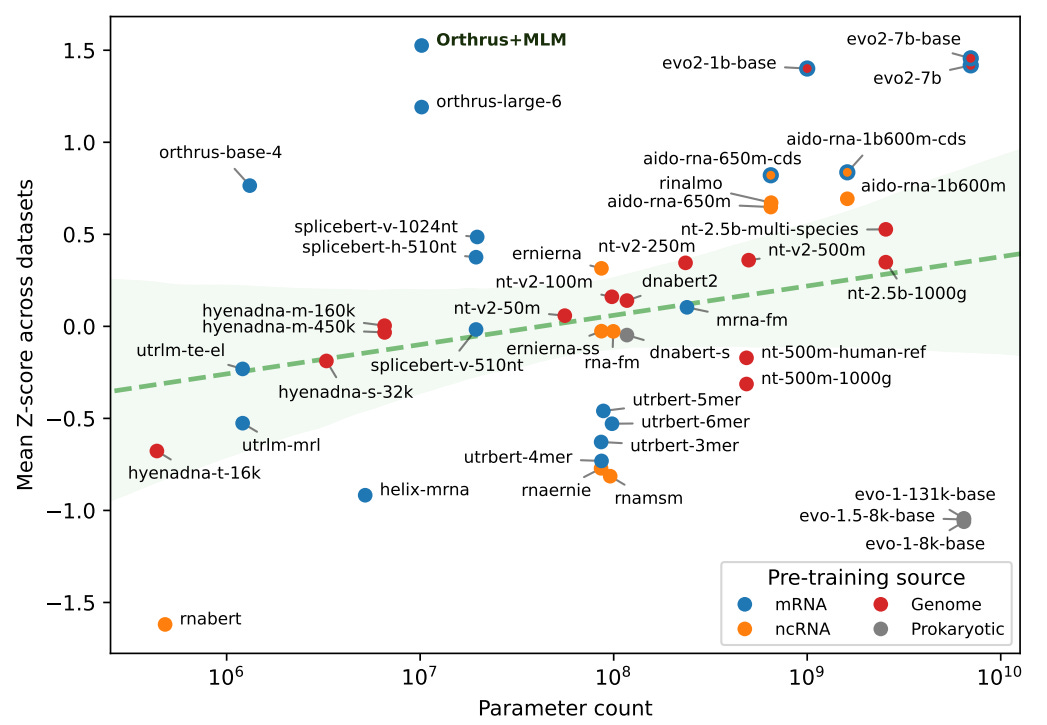

Recently, we’ve seen large-scale efforts to extend these trends to biology, including in the sub-domain of self-supervised nucleotide foundation modelling. Here, blockbuster models such as Evo, Evo2, and the AIDO family of DNA and RNA models boast billions of parameters, all trained on very large pre-training datasets. However, it’s unclear whether these huge models actually deliver proportional gains in downstream performance on the biological tasks that folks care about. Several studies have shown mixed results for self-supervised foundation models: classical deep learning approaches can beat them on various tasks [1, 2, 3], and simple baselines can sometimes outperform both [4].

In a shameless plug, my collaborators and I put together a comprehensive benchmark for mRNA biology, where we managed to beat out the behemoths with a tiny 10M-parameter foundation model (Orthrus + MLM).

Now, I’m in the camp that believes these foundation models still have clear potential, but right now the GPU hours we put into them aren’t buying us as much performance as we would hope. In this blog, I’m going to dive into why we think scaling laws don’t (currently) work well for biological sequences, and whether better pre-training objectives can fix this trend.

Modelling Nucleotides

When it comes to genomics, it’s tempting to model sequence directly as text. After all, there’s a well-defined vocabulary of nucleotides in DNA or RNA (A, C, G, T/U), which are concatenated into strings of characters that carry semantic meaning. Naively, you’d be tempted to throw these strings into your standard Transformer and apply a standard masked language modelling or causal language modelling objective (which is probably what happened in the first generation of these models).
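To make the naive setup concrete, here is a minimal sketch of what “treating DNA as text” looks like: single-nucleotide tokenization, causal LM input/target pairs, and a simplified masked LM corruption. The vocabulary, `MASK_ID`, the `-100` ignore label and the 15% default masking rate are illustrative assumptions borrowed from common language-model practice, not the setup of any particular nucleotide model.

```python
import random

# Hypothetical vocabulary: one token per nucleotide, plus a [MASK] id for MLM.
VOCAB = {"A": 0, "C": 1, "G": 2, "T": 3}
MASK_ID = 4
IGNORE = -100  # label meaning "no loss here" (a common PyTorch convention)


def tokenize(seq):
    """Map a DNA string to integer ids, one token per nucleotide."""
    return [VOCAB[base] for base in seq.upper()]


def clm_pairs(ids):
    """Causal LM: inputs are tokens 0..n-2, targets are the same ids shifted by one."""
    return ids[:-1], ids[1:]


def mlm_mask(ids, mask_prob=0.15, seed=0):
    """Simplified masked LM: replace roughly mask_prob of tokens with MASK_ID.

    Labels keep the original id at masked positions and IGNORE elsewhere.
    (BERT-style 80/10/10 replacement is omitted for brevity.)
    """
    rng = random.Random(seed)
    inputs, labels = [], []
    for tok in ids:
        if rng.random() < mask_prob:
            inputs.append(MASK_ID)
            labels.append(tok)
        else:
            inputs.append(tok)
            labels.append(IGNORE)
    return inputs, labels


ids = tokenize("ACGTTGCA")
x, y = clm_pairs(ids)  # predict each next nucleotide from its prefix
masked, labels = mlm_mask(ids, mask_prob=0.5)
```

Nothing in either objective knows which positions are biologically meaningful: every nucleotide is an equally weighted prediction target.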

Wishfully, you’d then be able to follow the GPT playbook: buy tons of GPUs, gather a bunch of unlabelled sequences, and pick up your all-seeing bio-model once it’s done baking. To me, this analogy breaks down in two ways:

The current pre-training objectives don’t leverage biological structures well.

We can’t brute force our way out, because we’re already effectively out of data.

While I’m on this soapbox, I’ll share some guesses on why these things happen.

On Biology

At some point in our lives, we’re all forced to learn biology against our will. Dear reader, today might just be that day.

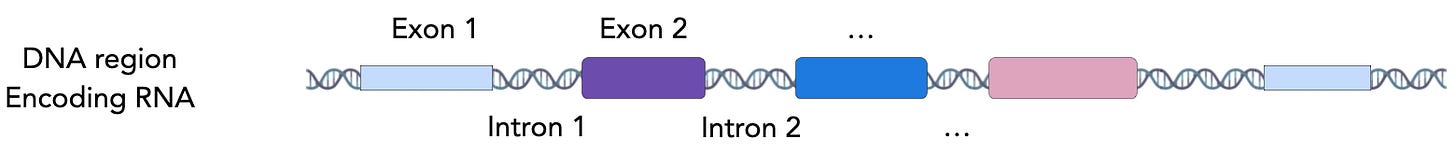

To understand the intuition behind why natural language isn’t a good proxy for genomic sequence, it’s worth taking a look at how information is structured in a (eukaryotic) organism.

The basic unit within genomics is the gene, a region of DNA in your genome. Each gene contains the instructions to make a specific protein, which carries out a specific task that helps an organism keep the lights on for another day. These instructions are copied (transcribed) from the DNA into mRNA molecules, which are then translated into proteins when the cell deems it appropriate.

The cell has many ways to control protein production, and the presence of this regulation can often be detected as specific patterns in DNA or RNA. When we train our foundation models on genomic data, we’re implicitly asking the model to learn these patterns. Just as predicting the next word in a sentence teaches GPT about how the grammar of English works, we might teach a foundation model about the grammar of biology by predicting the next nucleotide. At a high level, if you’ll take my word for it, these grammatical patterns should be inherently learnable.

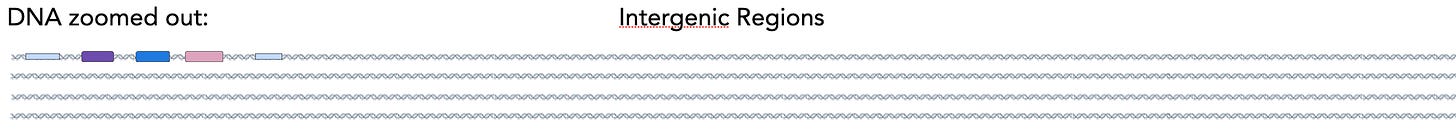

The core problem with modelling this biological grammar is how sparse the genome’s signal is. In the human genome, ~25% of nucleotide positions fall within gene regions, while just ~2% make it into a protein product.

While the remaining regions may have pockets of importance, a large chunk of the genome can be thought of as noise. Naively training an LLM using typical strategies is like watching one of those super old TVs with horrible reception that’s only picking up fuzzy static, and trying to guess which program is on.

Note: As with any exposition of a complex subject, a lot of precision was glossed over in favour of the broader picture. For example, intronic and intergenic regions are not entirely signal-free, but have been presented as such. These are the spherical cow assumptions in our space.

Seeing patterns in the TV snow

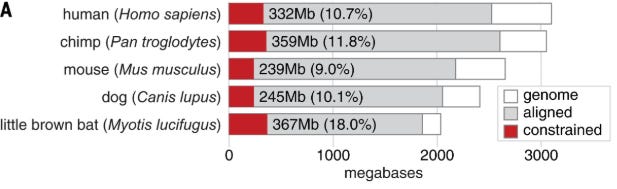

So how bad is this issue? One way to measure it is by quantifying the conservation of genomic positions across species. The intuition here is that if a position in the genome is important and has some function, mutating it to another nucleotide will likely harm the organism, possibly fatally. So, throughout evolution, these important positions remain unchanged, or are conserved.

Large-scale analyses spanning hundreds of mammalian species find that only about 10% of positions in the human genome are conserved. Translating this back into the modelling space, when we naively pre-train models using causal language modelling, the next token we’re predicting doesn’t actually matter roughly 9 times out of 10.
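As a toy illustration of this argument, the sketch below scores each column of a small multiple sequence alignment by the fraction of species that share its most common base, then asks what fraction of causal-LM targets land on fully conserved columns. The alignment is made up, and the identity-based score is a crude stand-in (an assumption) for real phylogeny-aware conservation scores such as phyloP or GERP.

```python
from collections import Counter


def column_conservation(alignment):
    """Fraction of species sharing the most common base at each aligned column.

    Crude stand-in for real conservation scores, which model the phylogeny
    instead of just counting identical bases.
    """
    n_species = len(alignment)
    scores = []
    for j in range(len(alignment[0])):
        column = [seq[j] for seq in alignment]
        most_common_count = Counter(column).most_common(1)[0][1]
        scores.append(most_common_count / n_species)
    return scores


def conserved_target_fraction(scores, threshold=1.0):
    """Fraction of next-token targets (positions 1..L-1) on conserved columns."""
    targets = scores[1:]  # position 0 is never a causal-LM target
    return sum(1 for s in targets if s >= threshold) / len(targets)


# Hypothetical alignment of the "same" short region in four species.
alignment = [
    "ATGGCTTACGA",
    "ATGCCATTCGT",
    "ATGACGTACCA",
    "ATGTCCTGCGG",
]
scores = column_conservation(alignment)
frac = conserved_target_fraction(scores)
```

On this toy alignment, half of the targets are conserved; applying the same calculation to a genome where only ~10% of positions are conserved would give roughly 0.1, which is the “9 times out of 10” above.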

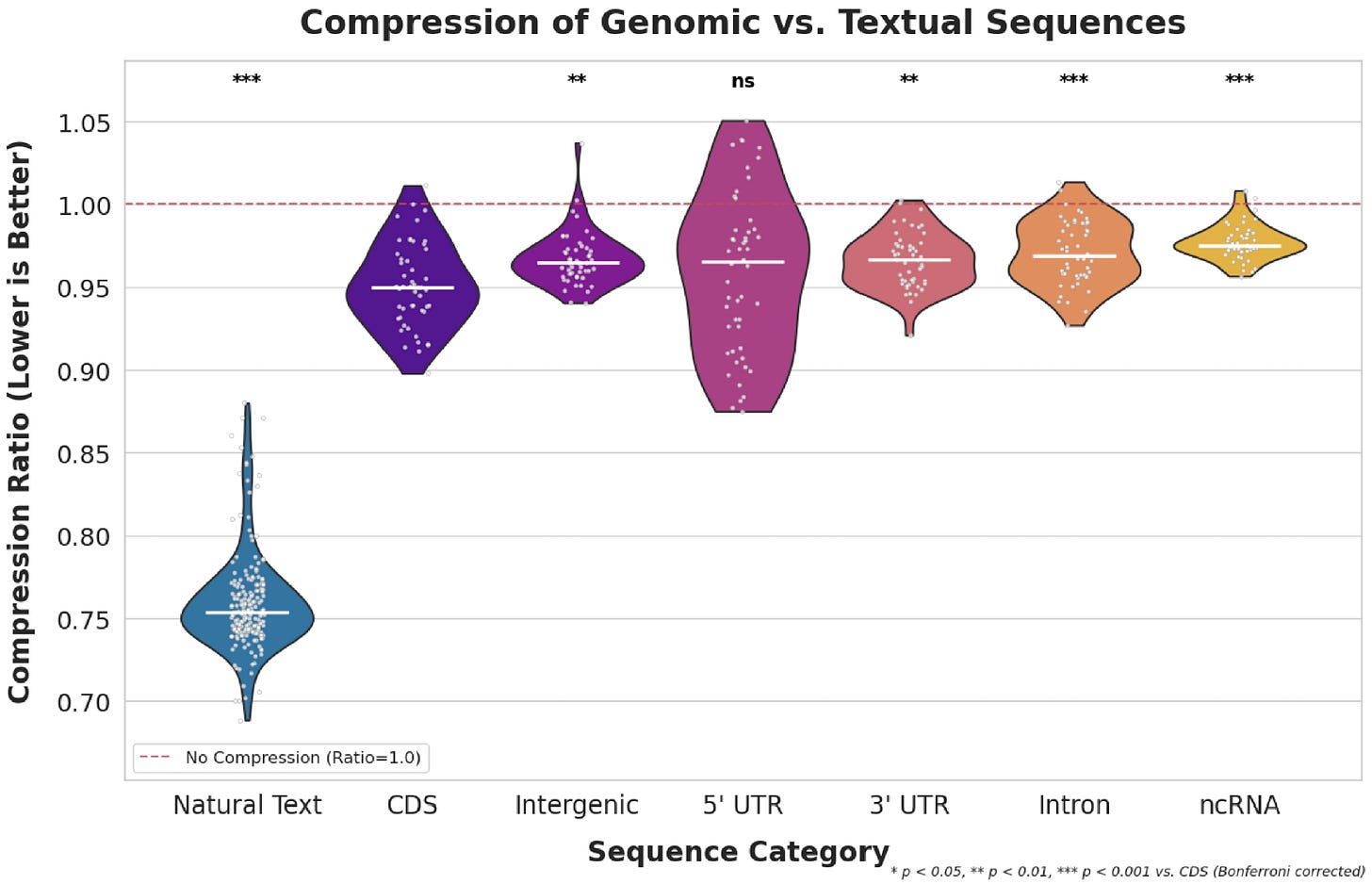

We can also investigate this issue from the perspective of compression: it’s thought that the performance of self-supervised foundation models in language and vision is closely tied to their ability to compress input data. In a super cool study, my collaborator Phil estimated the distributional differences between genomic data and natural language using a Huffman encoder.

A Huffman encoder compresses sequences by assigning shorter codes to frequently recurring symbols, which is a very simplistic approximation of what a foundation model winds up doing under the hood. Since genomic data is relatively unconserved, we see much less structure than in natural language, resulting in a modality that’s much harder to represent. This problem is only exacerbated by the poor fit between the self-supervised objectives everyone’s using and biological structure. So really, scaling does work; the current approaches are just so sample-inefficient that we’re likely to run out of data and compute before we get anywhere meaningful.
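The exact setup of Phil’s study isn’t described here, so the following is only a minimal sketch of the general idea under illustrative assumptions: build a Huffman code over tokens (single characters, or non-overlapping k-mers to pick up short recurring patterns), then compare its average code length with a fixed-width code over the same observed tokens. A ratio near 1.0 means Huffman finds little exploitable structure; lower means more compressible statistics.

```python
import heapq
import math
from collections import Counter


def huffman_code_lengths(freqs):
    """Return {symbol: code length in bits} for a Huffman code built from counts."""
    if len(freqs) == 1:
        return {sym: 1 for sym in freqs}  # a lone symbol still needs one bit
    # Heap entries: (total count, unique tie-breaker, {symbol: depth so far}).
    heap = [(count, i, {sym: 0}) for i, (sym, count) in enumerate(freqs.items())]
    heapq.heapify(heap)
    tie = len(heap)
    while len(heap) > 1:
        c1, _, d1 = heapq.heappop(heap)
        c2, _, d2 = heapq.heappop(heap)
        # Merging the two rarest subtrees pushes all their symbols one level deeper.
        merged = {sym: depth + 1 for sym, depth in {**d1, **d2}.items()}
        heapq.heappush(heap, (c1 + c2, tie, merged))
        tie += 1
    return heap[0][2]


def relative_code_length(tokens):
    """Average Huffman bits per token divided by fixed-width bits per token."""
    freqs = Counter(tokens)
    lengths = huffman_code_lengths(freqs)
    avg_bits = sum(freqs[s] * lengths[s] for s in freqs) / sum(freqs.values())
    fixed_bits = max(1, math.ceil(math.log2(len(freqs))))
    return avg_bits / fixed_bits


def kmers(seq, k):
    """Non-overlapping k-mer tokens, dropping any ragged tail."""
    return [seq[i:i + k] for i in range(0, len(seq) - k + 1, k)]
```

For example, you could compare `relative_code_length(list(english_text))` against `relative_code_length(kmers(dna_seq, 3))` on texts of your choosing. Single-symbol Huffman coding only sees token frequencies, not longer-range context, which is part of why it is such a crude proxy for a foundation model.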

Could we just scale our way out of the problem? Probably not, because:

We’re all outta data!

In many ways, the Evo2 manuscript is a truly monumental engineering effort. They’ve open-sourced the OpenGenome2 dataset, which contains 8.8 trillion tokens from all domains of life, including Bacteria, Archaea and Eukarya. While the corresponding Evo2 model trained on this dataset is a pretty big breakthrough, I also have a hunch that it might represent a high-water mark for how far brute-force scaling on sequence alone can take us.

In terms of sequence data, I’d wager that OpenGenome2 captures close to all the meaningful biological diversity we can reasonably collect, and it still falls short of the dataset sizes you see in language (Llama 3 was trained on over 15T tokens). But let’s focus on humans in particular, to highlight the unique challenges of biology.

With the cost of genomic sequencing falling faster than Moore’s law, one might think you could just go out and sequence everyone to create some “OpenHumanGenome” dataset. In fact, companies already leverage their diagnostics platforms to amass such datasets for clinical purposes. At a couple hundred bucks a pop, sequencing everyone in the world isn’t completely unrealistic. The real issue is that you might not actually get that much data diversity from doing this.

Unfortunately, humans have particularly low genetic diversity, thanks to a population bottleneck in our recent past. On average, any two individuals differ by only ~30 novel coding-region nucleotide mutations, out of the ~3B nucleotides in a genome. In non-coding regions this number grows, but genome-wide two people still differ at only about 0.1% of positions. Essentially, individual genomes share high mutual information: once you’ve seen one person at the genomic level, you’ve basically seen them all. We’re likely not going to brute force our way out.
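To see why near-identical genomes add so little new training signal, here is a toy sketch: take a random 100 kb sequence as “person A”, apply substitutions at 0.1% of positions (roughly the commonly quoted pairwise difference between two humans) to get “person B”, and count how many of B’s 21-mers never appeared in A. The random sequence, the substitution-only mutation model and k = 21 are simplifying assumptions; real genomes are not random and also differ by indels and structural variants.

```python
import random


def kmer_set(seq, k):
    """All distinct overlapping k-mers in seq."""
    return {seq[i:i + k] for i in range(len(seq) - k + 1)}


def mutate(seq, n_subs, rng):
    """Apply n_subs single-nucleotide substitutions at distinct random positions."""
    bases = list(seq)
    for pos in rng.sample(range(len(bases)), n_subs):
        bases[pos] = rng.choice([b for b in "ACGT" if b != bases[pos]])
    return "".join(bases)


def novel_kmer_fraction(seen, new_seq, k):
    """Fraction of new_seq's distinct k-mers that were never seen before."""
    new_kmers = kmer_set(new_seq, k)
    return len(new_kmers - seen) / len(new_kmers)


rng = random.Random(0)
k = 21
person_a = "".join(rng.choice("ACGT") for _ in range(100_000))
person_b = mutate(person_a, n_subs=100, rng=rng)  # 0.1% of positions differ
frac = novel_kmer_fraction(kmer_set(person_a, k), person_b, k)
```

Each substitution can create at most k new k-mers, so the novel fraction is capped at about n_subs × k divided by the number of distinct k-mers, here around 2%: almost everything in the second genome has already been seen.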

What comes next?

Are we, as the kids say, cooked? Honestly, I don’t think so. First of all, you can never discount what the folks at the Arc Institute and elsewhere are cooking up. It’s possible Evo3 drops in the near future and blows everything away simply by adding more parameters.

However, assuming this doesn’t happen and I don’t have to delete this blog post in shame, I think the limitations of modelling biological sequence data actually represent an exciting opportunity. The data constraints and limited utility of scaling laws evoke the late-2010s era of machine learning I came up in, before Attention Was All We Needed (for language and vision only, apparently), when clever algorithmic tricks still offered performance differentiation.

While everyone has a take on the bitter lesson, my personal interpretation isn’t that we shouldn’t bake inductive biases into our models, but rather that those inductive biases should be able to actually leverage advances in compute. It’s not like the Transformer was devoid of human intuition: we baked in the attention mechanism, which itself encodes a human-ascribed intuition about the differential and context-dependent importance of input tokens. Likewise, causal and masked language pre-training objectives encode specific inductive biases of their own.
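To make the point about attention and pre-training objectives concrete, here is a minimal pure-Python sketch of scaled dot-product attention weights. The softmax over query-key scores is the built-in assumption that tokens matter differently depending on context, and the optional causal mask is the extra bias that causal (next-token) pre-training adds, whereas masked LM lets every position see the whole sequence. The toy vectors are arbitrary, and this is not the architecture of any specific nucleotide model.

```python
import math


def attention_weights(queries, keys, causal=False):
    """Scaled dot-product attention weights, softmax(Q K^T / sqrt(d)), as nested lists.

    causal=True blocks each position from attending to later positions
    (the causal-LM bias); causal=False lets every position see full context.
    """
    d = len(keys[0])
    weights = []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(float("-inf"))  # future token: weight becomes 0
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d))
        m = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token embeddings
w_causal = attention_weights(x, x, causal=True)
w_full = attention_weights(x, x)
```

Both variants learn *which* tokens matter from data, but the masking pattern decides *which* tokens a position is even allowed to use, and that choice is made by a human before training starts.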

The issue, then, becomes finding the right architectures and pre-training objectives that let our foundation models work with biological sequences. Shameless plug #2: Phil, Jonny and I are going through YC’s S25 batch as Blank Bio, where we’re building the next generation of RNA foundation models that do just that.

So, scaling is dead, but long live scaling.